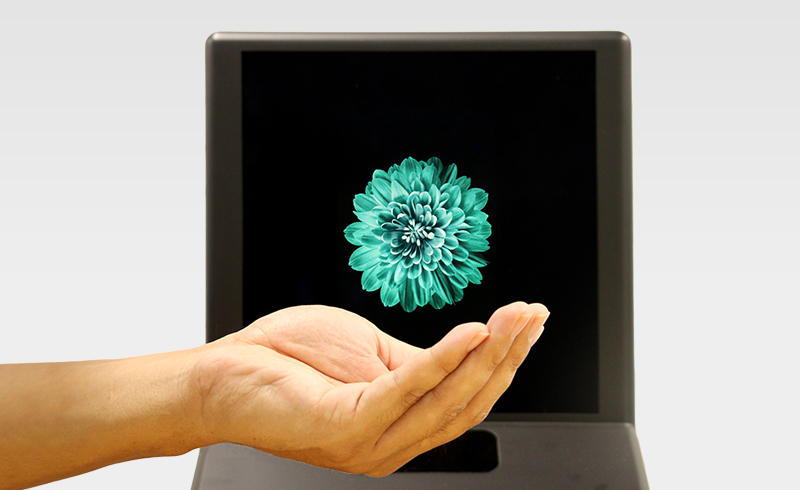

High-Definition Aerial Display

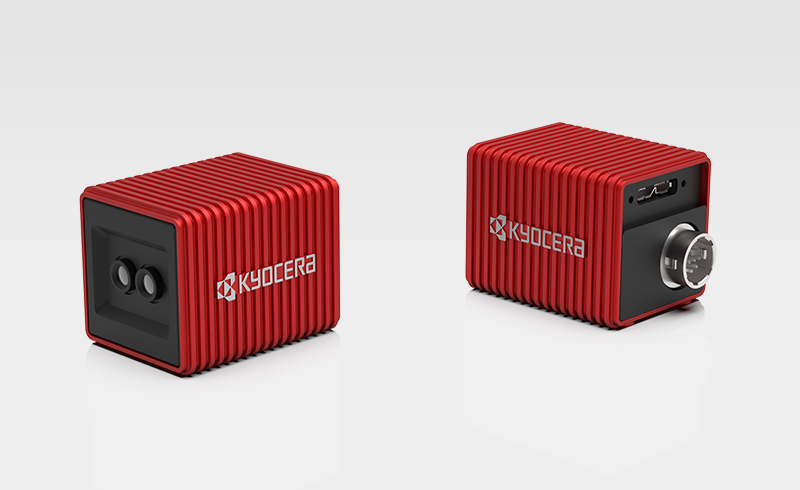

Kyocera’s Aerial Display combines miniaturization, high resolution, and next-generation image quality to project highly realistic, floating images. Curved mirrors maximize light efficiency, producing a high-resolution display with low power consumption. Unlike any current display, the interactive display uses various sensors for non-contact operation. Kyocera’s booth will showcase a futuristic automotive navigation demonstration built on this aerial display technology.